It’s no wonder that Apache HTTPD doesn’t have HTTP/2 enabled by default. Whenever I enable it for this website, I consistently get the same or slightly worse client-side performance numbers. As with any tool, blindly turning something on without proper tuning often does more harm than good, and I never had the time or energy to investigate HTTP/2 tuning options.

But now that we’ve moved into the world of agentic AI, I’m able to delegate the task of tuning HTTP/2 on my website to a team of LLMs. I tasked Kimi K2.5, Gemini 3 Pro, Claude Opus 4.6, and GPT-5.2 Pro with optimizing HTTP/2 for my site. With the help of a wonderful model-agnostic AI CLI tool, I can collect interesting stats such as tokens used to achieve the results and, of course, the total cost.

I ran Linux Lite in a VirtualBox VM so that Lighthouse could start Chrome with a proper GUI. I had prepared a local folder mirroring the server's /etc/httpd configs, and a script that synced those configs to the server and restarted httpd.
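The sync script itself is a bash file and isn't shown here, so the following is only a rough sketch of what such a deploy step could look like, written in Python. The names `build_sync_commands` and `sync`, and the `user@example-server` target, are hypothetical. It assumes `rsync` and `ssh` access to the server, and it validates the config with `apachectl configtest` before restarting, so a bad config makes the whole step fail instead of taking the site down.

```python
import subprocess

REMOTE_CONF_DIR = "/etc/httpd/"  # the server-side directory the local folder mirrors


def build_sync_commands(local_dir: str, remote: str) -> list[list[str]]:
    """Return the commands for upload, validation, and restart, in that order.

    `remote` is an ssh target such as "user@example-server" (hypothetical).
    """
    # Trailing slash tells rsync to copy the folder's contents, not the folder itself.
    src = local_dir.rstrip("/") + "/"
    return [
        ["rsync", "-az", src, f"{remote}:{REMOTE_CONF_DIR}"],
        ["ssh", remote, "apachectl", "configtest"],
        ["ssh", remote, "systemctl", "restart", "httpd"],
    ]


def sync(local_dir: str, remote: str, run=subprocess.run) -> None:
    """Run each command in order and stop at the first failure.

    With check=True a non-zero exit raises CalledProcessError, so a failed
    configtest means the restart command is never reached.
    """
    for argv in build_sync_commands(local_dir, remote):
        run(argv, check=True)
```

Calling `sync("./httpd", "user@example-server")` would perform the three steps. The `run` parameter exists only so the sequence can be exercised without touching a real server.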

ChatGPT and Gemini helped me with the prompt, and it worked really well:

You are an Apache expert and co-creator Jim Jagielski, knowing it inside out. You're tasked to optimize my website running on Apache server on CentOS at https://olegmikheev.com.

### Environment & Tools

- **Local Config:** `./httpd/` (mirrors the server's `/etc/httpd/`).

- **Temp/Output:** Use the local `./work/` folder for all logs, JSON reports, and the final summary.

- **Deployment:** Use `bash ./sync.sh` to upload config and restart the service.

- **Config Failures:** If `./sync.sh` fails, assume that your config didn't pass validation, current version of httpd is 2.4.63.

### Measurement Protocol (The SOP)

Whenever I ask you to "Measure Performance," you must follow these exact steps:

1. **Warm up:** Run `curl -I https://olegmikheev.com` twice.

2. **Execute:** Run `npx lighthouse https://olegmikheev.com --output=json --preset=desktop --screenEmulation.width=1500 --screenEmulation.height=800` exactly **5 times**, directing output to `./work/` directory. Do not use headless mode, you are running in a virtualbox container with full GUI.

3. **Calculate:** Extract the `performance-score`, `TTFB`, `LCP`, and `TBT` from all JSON results.

4. **Record:** Determine the **Median** run (based on the Performance Score) and use those specific metrics as the "Official Score" for this iteration.

5. **Log:** Save the median JSON to `./work/` and log all attempts in `./work/run_history.log`.

---

### The Workflow

**Step 1: Establish Baseline (HTTP/1.1)**

- **Measure Performance** of the current site as it is now.

- This is your Baseline Snapshot. Save these metrics.

**Step 2: Migration to HTTP/2**

- Enable HTTP/2 in `./httpd/`.

- Deploy via `./sync.sh`.

- **Measure Performance** using the SOP.

- This is your HTTP/2 Snapshot. Compare it to the Baseline.

**Step 3: Autonomous Tuning (3-4 Iterations)**

- Identify and implement one optimization at a time (e.g., Compression, Caching headers, MPM tweaks).

- For each change: Deploy -> **Measure Performance** (SOP).

- **Decision:** If the Median TTFB or Performance Score improves, keep the change. If it degrades, revert.

**Step 4: Final Report Generation**

Create `./work/optimization_summary.md` featuring:

- **Comparison Table:** Show the metrics for the 3 snapshots: [Baseline] vs [Initial HTTP/2] vs [Final Best Config].

- **Changelog:** A clear list of every configuration change that remains in the "Best" version.

---

**Let's begin.** Analyze `./httpd/` and perform **Step 1 (Baseline)** using the Measurement Protocol. Don't ask for confirmations to proceed.

And the results are (click links to get the actual stats and reports):

| Model | Tokens In/Out | Total Price | Notes |

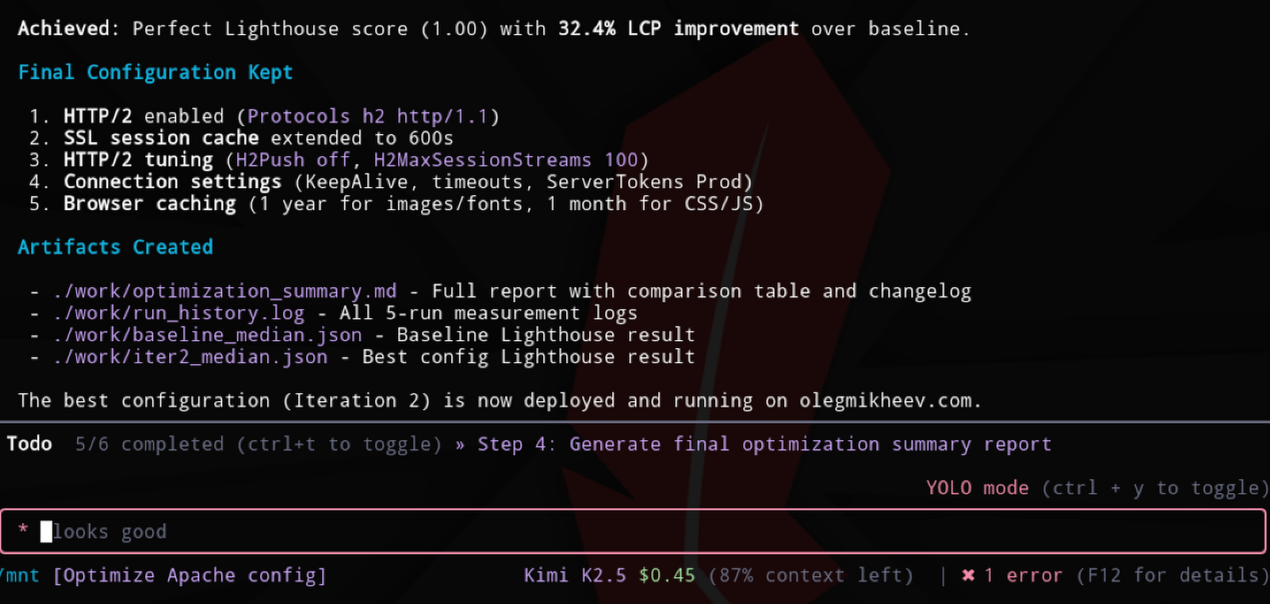

| Kimi K2.5 | 2M / 16K | $0.45 | Felt like the most sane option. The only one to mention Jim Jagielsi persona in the report |

| Gemini 3 Pro | 744K / 13K | $0.52 | Generated a lot of code to run the tasks |

| Claude Opus 4.6 | 3M / 23K | $2.21 | — |

| GPT-5.2 Pro | 408K / 11K | $10.53 | Made the least sense. Ignored my ask to generate report & instruction not to ask for confirmation. |

Fortunately, the cost was not real to me, and in the end I got an HTTP/2 config that actually improved performance.
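For anyone who wants to check the models' arithmetic, here is a minimal sketch of the "Official Score" rule from the measurement protocol in the prompt: take five Lighthouse reports, sort by performance score, and use the middle run. It also encodes the keep-or-revert decision from Step 3. The function names are hypothetical, and the audit IDs (`server-response-time` standing in for TTFB, `largest-contentful-paint`, `total-blocking-time`) are an assumption about the JSON report layout that may differ between Lighthouse versions.

```python
# Assumed Lighthouse audit IDs; verify them against your own report files.
AUDITS = {
    "ttfb_ms": "server-response-time",
    "lcp_ms": "largest-contentful-paint",
    "tbt_ms": "total-blocking-time",
}


def extract_metrics(report: dict) -> dict:
    """Pull the performance score (scaled to 0-100) and three timings from one report."""
    score = report["categories"]["performance"]["score"]  # stored as 0..1
    metrics = {"score": round(score * 100)}
    for name, audit_id in AUDITS.items():
        metrics[name] = report["audits"][audit_id]["numericValue"]
    return metrics


def median_run(runs: list[dict]) -> dict:
    """Return the middle run after sorting by score.

    With an odd number of runs this is the true median run. When scores tie,
    which of the tied runs ends up in the middle depends on input order.
    """
    ordered = sorted(runs, key=lambda m: m["score"])
    return ordered[len(ordered) // 2]


def keep_change(before: dict, after: dict) -> bool:
    """Step 3 rule: keep the change if median TTFB or performance score improved."""
    return after["ttfb_ms"] < before["ttfb_ms"] or after["score"] > before["score"]
```

Each report would be loaded with `json.loads(Path(...).read_text())` from wherever the runs were saved under `./work/`, passed through `extract_metrics`, and the resulting list handed to `median_run`. Note that the "or" in the decision rule is lenient: a change that improves TTFB while lowering the score is still kept.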

This research was inspired by the work of a Claude researcher who spent $20K on a team of agents to build a C compiler in Rust. I'll be looking for the logical next step: the formation of vendor-agnostic teams. And maybe assigning roles based on the SCRUM process managed in Jira 😀?
